Live streaming technology - Past, Present and Future

NPU processors and AI technology

This is the first article in a series that takes a closer look at the underlying technologies that power the world of live streaming and content creation.

It may seem like live streaming has been done the exact same way for years. However, under the hood, new hardware capabilities, encoding technologies, and technical innovations have been constantly shifting the underlying landscape. When I joined XSplit back in 2012, live streaming was just starting to gain traction. Since then, I've had a front-row seat to the incredible technological advancements that have transformed, and are continuing to transform, the industry.

One of the most significant shifts we've seen is the move from CPU-based encoding to hardware encoding on the GPU. This change wasn't just a minor upgrade; it was a revolution. It made streaming accessible to the masses, because producing a high-quality HD live stream no longer required a prohibitively expensive streaming rig with a beefy CPU. Big players like Intel, Nvidia, and AMD all rolled out their own dedicated encoders, recognizing the profound impact live content creation was beginning to have on our digital world.

A new piece of chip architecture is now changing the landscape yet again: the NPU (Neural Processing Unit). These chips are game changers for AI-driven tasks, which can now run more smoothly without bogging down your CPU or GPU. Intel is a driving force behind this shift, as they are integrating NPUs into all new laptop CPUs and most desktop CPUs to bring their AI PC vision to life.

For content creators, this will make AI-based enhancements to the creator experience even more accessible in the future, such as AI-driven background removal, audio enhancements, gaming highlights recognition, subtitles, automated reactions and much more, without negatively impacting gaming performance or streaming quality.

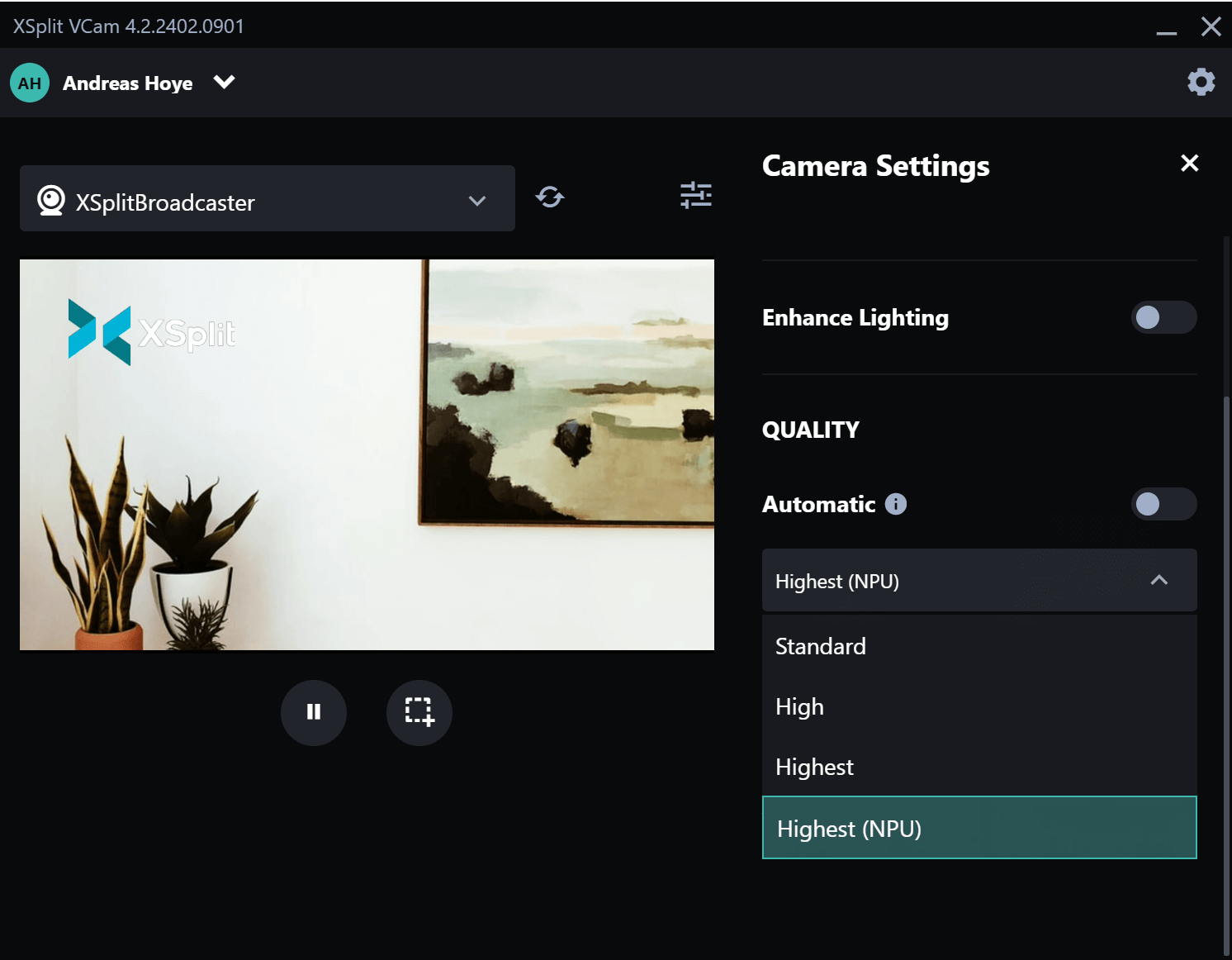

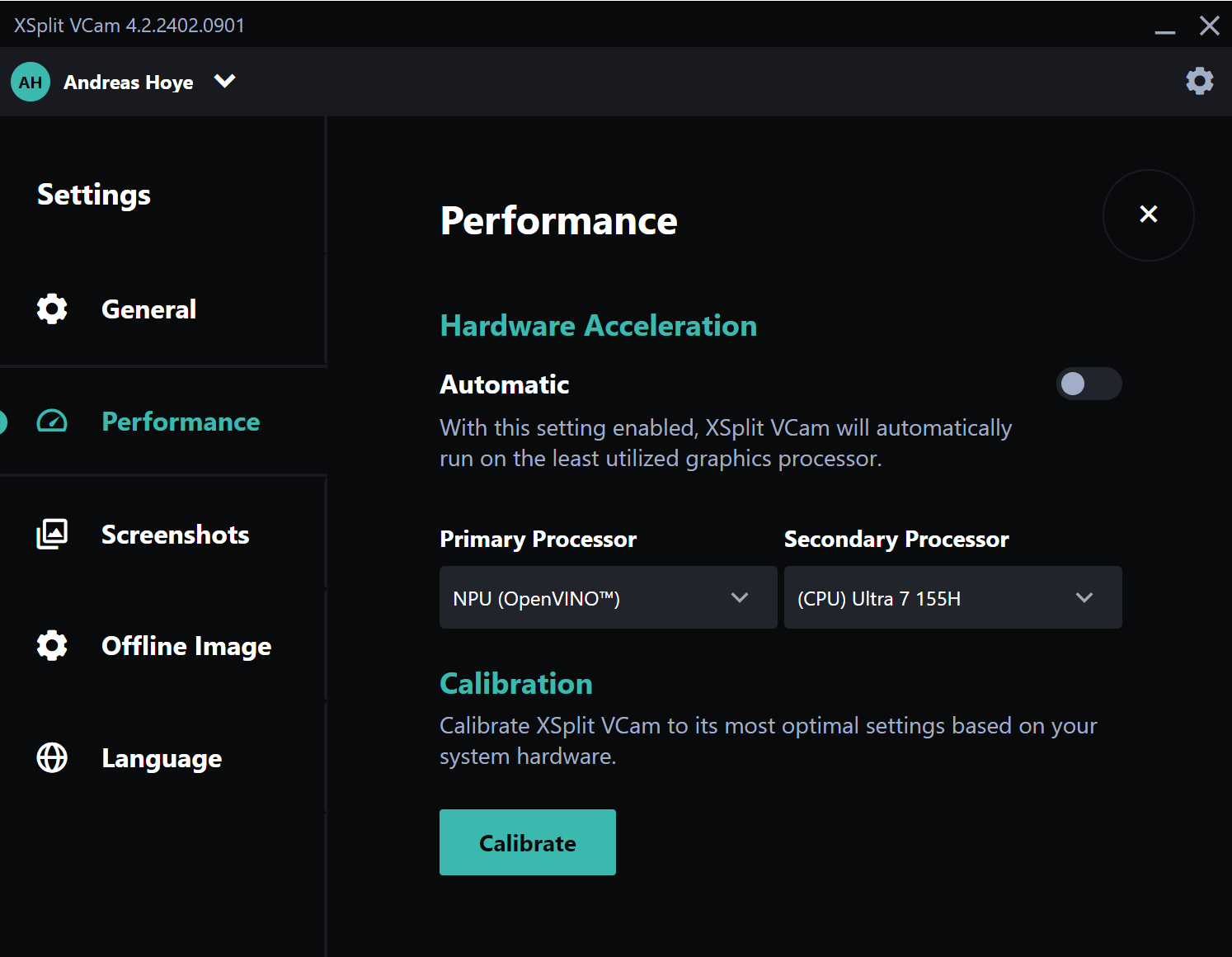

At XSplit, we've leveraged the power of NPUs in exciting ways. We teamed up with Intel to enhance our VCam software, which uses our own patented AI technology for webcam background removal. When running on a machine with an NPU, e.g. any machine with the new Intel Core Ultra processor, VCam now offers a more powerful model that delivers noticeably better results—particularly with tricky details like chair edges, headsets or when someone else suddenly moves in the background.

The performance boost is clear: when measured against a perfect removal of all background elements, our previous best AI model has an average inaccuracy rate of 2.5%, but with the new NPU-optimized model that drops to just 1.5%, a 40% reduction in inaccuracies. While the inaccuracy rate may sound negligible in either case, this improvement makes a real difference in live streaming, where clarity and visual quality can make or break viewer engagement.
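To make the metric concrete, here is a minimal Python sketch of one plausible way to score a mask against a perfect reference. It assumes the inaccuracy rate is the fraction of pixels where the predicted mask disagrees with the reference mask; the article does not spell out the exact definition, and the function names and toy masks are hypothetical.

```python
def inaccuracy_rate(predicted, reference):
    """Fraction of pixels where the predicted mask disagrees with the reference.

    Both masks are lists of rows, with 1 = person and 0 = background.
    This per-pixel definition is an assumption, not VCam's documented metric.
    """
    total = 0
    wrong = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            total += 1
            if p != r:
                wrong += 1
    return wrong / total


def relative_reduction(old_rate, new_rate):
    """Relative improvement when going from old_rate to new_rate."""
    return (old_rate - new_rate) / old_rate


# Toy 4x5 masks (20 pixels); the prediction gets exactly one pixel wrong.
reference = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
]
predicted = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1],  # one background pixel wrongly kept as "person"
    [0, 1, 1, 1, 0],
]

toy_rate = inaccuracy_rate(predicted, reference)

# The figures quoted in the article: 2.5% down to 1.5%.
article_reduction = relative_reduction(2.5, 1.5)
print(f"toy inaccuracy rate: {toy_rate:.2%}")
print(f"relative reduction:  {article_reduction:.0%}")
```

The second function is simply the arithmetic behind the 40% figure: (2.5 - 1.5) / 2.5.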

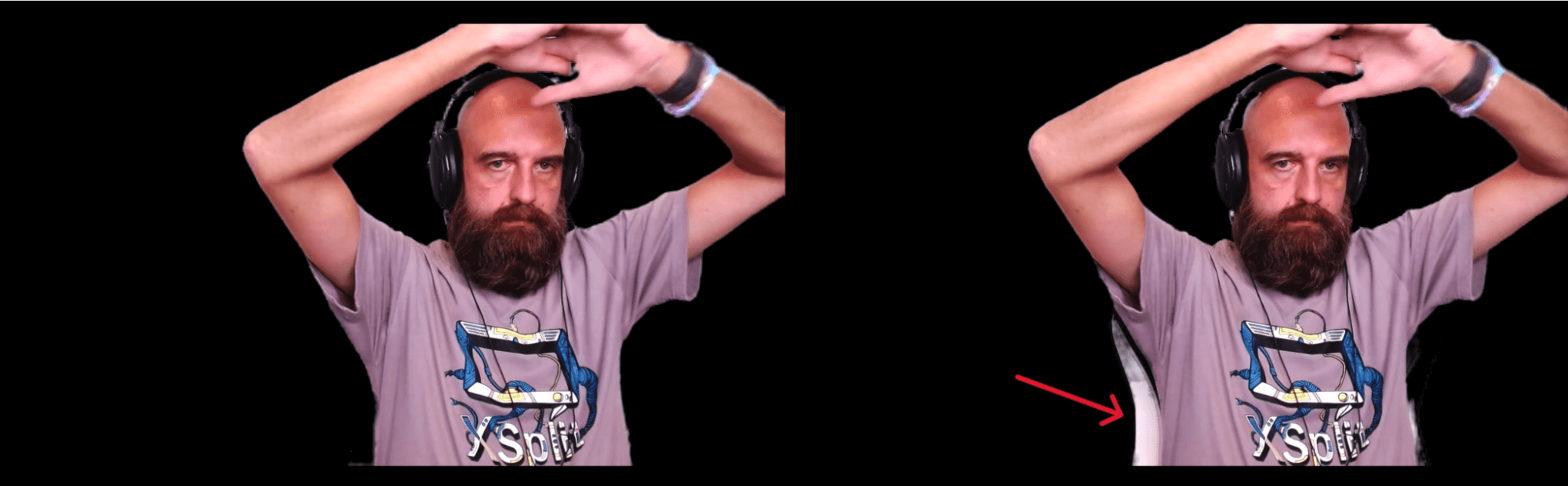

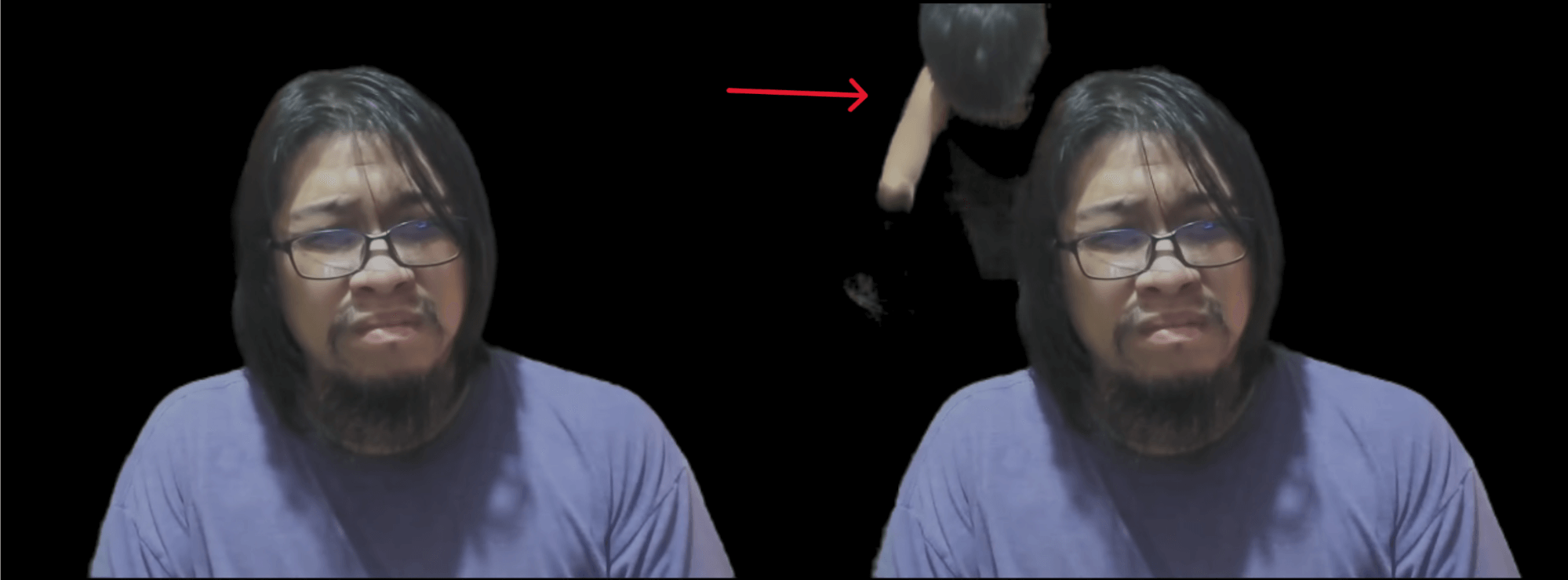

Examples:

Higher level of precision when segmenting edge elements like chair and headphones

Significantly reduced risk of model confusion when a person moves in the background

Here's how it plays out in real life: We ran a series of live tests on an ASUS Zenbook with an Intel Core Ultra 7 processor with NPU and an Intel Arc graphics card. We benchmarked the impact on FPS when playing popular games like Cyberpunk 2077, Sims 4 and Fortnite while encoding the gameplay in 720p in XSplit Broadcaster, at the same time as running the highest-tier background removal models on both the CPU and NPU.

The results were impressive. The games ran smoothly, with only a minimal impact on frame rates when the NPU took on the heavy lifting for background processing.

In these scenarios, running the previous best background removal model on the NPU gave ~13% higher average FPS in the games than running the same model on the CPU. Running the new, higher-performance NPU-optimized model on the CPU brought the game to a constant stutter, while running it on the NPU gave a smooth experience with 7.5% higher average FPS than running the previous best model on the CPU.

| Background removal model | Processor | Average FPS |
| --- | --- | --- |
| Previous Best | CPU | 95.2 |
| Previous Best | NPU | 107.5 |
| New Best (NPU-optimized) | CPU | N/A (constant stutter) |
| New Best (NPU-optimized) | NPU | 102.3 |
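The percentages quoted for these results can be recomputed from the table. The short Python sketch below does that, using the previous best model on the CPU as the baseline; the variable and function names are only illustrative.

```python
# Average FPS values copied from the table above.
average_fps = {
    ("previous", "CPU"): 95.2,
    ("previous", "NPU"): 107.5,
    ("new", "NPU"): 102.3,
    # ("new", "CPU") is omitted: the game stuttered constantly, so no FPS was reported.
}


def percent_gain(value, baseline):
    """Percentage by which value exceeds baseline."""
    return (value / baseline - 1.0) * 100.0


baseline = average_fps[("previous", "CPU")]
previous_on_npu = percent_gain(average_fps[("previous", "NPU")], baseline)
new_on_npu = percent_gain(average_fps[("new", "NPU")], baseline)

print(f"previous model on NPU vs CPU baseline: +{previous_on_npu:.1f}%")
print(f"new model on NPU vs CPU baseline:      +{new_on_npu:.1f}%")
```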

We also saw significant power savings and battery life improvements when running any of the background removal AI models on the NPU.

VCam uses Intel's OpenVINO API for inference on CPU and NPU. By default, inference in VCam uses FP16 precision on NPU and GPU, and Int8 quantization on CPU. In a future update, VCam may also switch to Int8 on the NPU for even lower power usage.
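As a rough illustration of the defaults described above, the following Python sketch picks an inference device and its default precision from a list of device names. The precision mapping comes from the paragraph above; the preference order (NPU, then GPU, then CPU) and the `choose_backend` helper are assumptions made for this example, not VCam's actual selection logic.

```python
# Default precision per device, as stated in the text above.
DEFAULT_PRECISION = {"NPU": "FP16", "GPU": "FP16", "CPU": "INT8"}

# Assumed preference order for this sketch: offload to the NPU when present.
PREFERRED_ORDER = ("NPU", "GPU", "CPU")


def choose_backend(available_devices):
    """Return (device, precision) for the most preferred available device.

    Device names may carry an index suffix such as "GPU.0", so only the
    part before the dot is compared.
    """
    families = {name.split(".")[0] for name in available_devices}
    for device in PREFERRED_ORDER:
        if device in families:
            return device, DEFAULT_PRECISION[device]
    raise RuntimeError("no supported inference device found")


# Example device lists (hypothetical machines).
ai_pc = choose_backend(["CPU", "GPU.0", "NPU"])
older_laptop = choose_backend(["CPU", "GPU"])
cpu_only = choose_backend(["CPU"])
print(ai_pc, older_laptop, cpu_only)
```

With OpenVINO's Python package installed, the device list could come from `openvino.Core().available_devices`, and the chosen device name could then be passed to `Core.compile_model`.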

VCam optimizes inference cycles when the camera has little or no movement. To measure the worst-case power consumption of the background removal model, we used our in-house model testing tool to force inference on every frame and tested different models at different precisions.
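The idea of skipping inference on near-static frames, and of forcing it on every frame for a worst-case measurement, can be sketched as follows. This is a simplified Python illustration under stated assumptions: motion is estimated as the mean absolute pixel difference against the last frame that was actually processed, and the class name, threshold, and `force_every_frame` flag are hypothetical. It is not how VCam or the in-house testing tool is implemented.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute difference between two equally sized grayscale frames."""
    diffs = [abs(a - b) for a, b in zip(frame_a, frame_b)]
    return sum(diffs) / len(diffs)


class MotionGatedSegmenter:
    """Reuse the previous mask while the camera image barely changes."""

    def __init__(self, run_model, threshold=2.0, force_every_frame=False):
        self.run_model = run_model              # callable: frame -> mask
        self.threshold = threshold              # assumed motion threshold
        self.force_every_frame = force_every_frame
        self.inference_count = 0
        self._last_frame = None
        self._last_mask = None

    def process(self, frame):
        needs_inference = (
            self.force_every_frame
            or self._last_frame is None
            or mean_abs_diff(frame, self._last_frame) > self.threshold
        )
        if needs_inference:
            self._last_mask = self.run_model(frame)
            # Compare against the last *processed* frame so slow drift
            # accumulates and eventually triggers a fresh inference.
            self._last_frame = frame
            self.inference_count += 1
        return self._last_mask


def fake_model(frame):
    """Stand-in for the real network: bright pixels count as 'person'."""
    return [1 if pixel > 100 else 0 for pixel in frame]


# Four tiny "frames" of four grayscale pixels each.
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 10],    # almost identical: mask is reused
    [200, 200, 10, 10],  # large change: inference runs again
    [200, 200, 10, 10],  # identical: mask is reused
]

gated = MotionGatedSegmenter(fake_model)
forced = MotionGatedSegmenter(fake_model, force_every_frame=True)
for frame in frames:
    gated.process(frame)
    forced.process(frame)

print("gated inferences: ", gated.inference_count)
print("forced inferences:", forced.inference_count)
```

Forcing inference on every frame removes the savings from the gating step, which is why it gives an upper bound on the model's power draw.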

| Processor (precision) | Previous model, *Package Power | New model, *Package Power |
| --- | --- | --- |
| CPU (Int8) | 22.90 | 28.19 |
| GPU (FP16) | 16.23 | 20.72 |
| NPU (FP16) | 13.05 | 14.21 |
| NPU (Int8) | 11.24 | 12.23 |

In conclusion, using the NPU for background removal inference reduced package power by up to about 57% compared to inferencing on the CPU (new model, NPU Int8 versus CPU Int8) and by up to about 41% compared to the GPU. Running the new higher-performance model on the NPU only increases power consumption by ~9% compared to running the previous best model on the NPU. Overall, this means both longer battery life and lower energy bills, a win-win for streamers and the planet.
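For readers who want to check the relative differences themselves, the Python sketch below recomputes them from the package-power table. The values are copied from the table in whatever unit it reports; the dictionary layout and helper name are only illustrative.

```python
# Package power per model and processor, copied from the table above.
package_power = {
    "previous": {"CPU Int8": 22.90, "GPU FP16": 16.23, "NPU FP16": 13.05, "NPU Int8": 11.24},
    "new": {"CPU Int8": 28.19, "GPU FP16": 20.72, "NPU FP16": 14.21, "NPU Int8": 12.23},
}


def percent_change(value, baseline):
    """Signed percentage change of value relative to baseline."""
    return (value / baseline - 1.0) * 100.0


# Savings from moving inference to the NPU, relative to the CPU.
npu_vs_cpu = {}
for model, readings in package_power.items():
    for npu_key in ("NPU FP16", "NPU Int8"):
        npu_vs_cpu[(model, npu_key)] = percent_change(readings[npu_key], readings["CPU Int8"])

# Extra cost of the new model over the previous one, both on the NPU.
new_vs_previous_on_npu = {
    key: percent_change(package_power["new"][key], package_power["previous"][key])
    for key in ("NPU FP16", "NPU Int8")
}

for (model, key), change in npu_vs_cpu.items():
    print(f"{model:8s} {key} vs CPU Int8: {change:+.1f}%")
for key, change in new_vs_previous_on_npu.items():
    print(f"new vs previous on {key}: {change:+.1f}%")
```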

Looking forward, the potential of NPUs in content creation is enormous. We're just scratching the surface of what's possible in terms of real-time video analytics, automated moderation, and personalized interactions. Imagine a live stream that adapts on the fly to viewer reactions and comments, creating a truly interactive experience.

In this series, we'll continue exploring the technologies shaping the past, present and future of live streaming. Stay tuned for more insights into how these innovations are redefining not just how we create content, but how we connect with the world.